Head of group: Jens Hjortkjær

The ACN group studies how the auditory brain represents and computes natural sounds such as speech. We use neuroimaging (MRI) and electrophysiology (EEG) to measure human brain activity in order to understand how sound stimuli are transformed into auditory objects by the brain, and how auditory attention modulates these representations. We work to create new brain-based hearing technology that combines speech separation technology and neural decoding.

Auditory neuroscience of attention

Listening in everyday life with many sound sources requires attention. Focusing attention on one talker in a noisy room is difficult for listeners with hearing loss, even with a modern hearing aid. Yet listeners with normal hearing effortlessly use attention to follow speech in complex acoustic environments, even when the relevant speech signal is severely degraded by noise. Our research focuses on understanding how the brain accomplishes this remarkable feat.

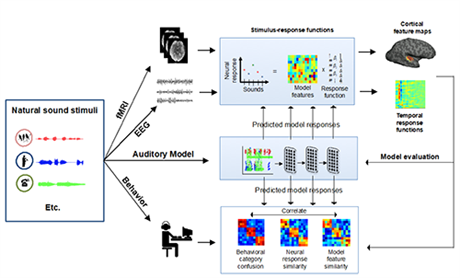

Our group develops quantitative models of electrophysiological and hemodynamic brain responses to natural sound signals, such as speech, environmental sounds, or music. We build stimulus-response models to ask what features of a sound signal are encoded by neural activity in different parts of the auditory system and how attention influences the encoding. We also use this framework to predict which sounds a listener is attending to based on recorded brain signals.
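To make the decoding idea concrete, here is a minimal sketch of one common approach, a linear backward model (stimulus reconstruction): a ridge-regularized mapping from time-lagged EEG to the speech envelope, after which the reconstructed envelope is correlated with each talker's envelope and the best match is taken as the attended talker. It is an illustration under stated assumptions, not the group's own pipeline. The EEG and envelopes are simulated, and the function names (`lag_matrix`, `train_decoder`, `decode_attention`, `synthetic_envelope`), the lag range and the ridge value are hypothetical choices.

```python
import numpy as np

def lag_matrix(eeg, max_lag):
    """Stack time-lagged EEG copies so that row t holds eeg[t], eeg[t+1], ..., eeg[t+max_lag].
    Later EEG samples are used because the neural response lags the stimulus."""
    n_samples, n_channels = eeg.shape
    X = np.zeros((n_samples, n_channels * (max_lag + 1)))
    for lag in range(max_lag + 1):
        X[: n_samples - lag, lag * n_channels:(lag + 1) * n_channels] = eeg[lag:]
    return X

def train_decoder(eeg, envelope, max_lag, ridge=1e2):
    """Ridge-regularized least squares mapping lagged EEG to the attended speech envelope."""
    X = lag_matrix(eeg, max_lag)
    A = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ envelope)

def decode_attention(eeg, envelopes, decoder, max_lag):
    """Reconstruct the envelope from EEG and return the index of the best-correlated talker."""
    recon = lag_matrix(eeg, max_lag) @ decoder
    scores = [np.corrcoef(recon, env)[0, 1] for env in envelopes]
    return int(np.argmax(scores)), scores

def synthetic_envelope(rng, n, width=8):
    """Hypothetical stand-in for a speech envelope: smoothed rectified noise, z-scored."""
    x = np.convolve(np.abs(rng.standard_normal(n)), np.ones(width) / width, mode="same")
    return (x - x.mean()) / x.std()

# Simulated data (assumption): 16-channel "EEG" that follows talker A with a 6-sample delay.
rng = np.random.default_rng(0)
n, n_channels, true_lag, max_lag = 64 * 60, 16, 6, 10
env_a = synthetic_envelope(rng, n)
env_b = synthetic_envelope(rng, n)
eeg = np.zeros((n, n_channels))
eeg[true_lag:] = np.outer(env_a[:-true_lag], rng.standard_normal(n_channels))
eeg += 3.0 * rng.standard_normal((n, n_channels))

# Train on the first half, decode attention on the held-out second half.
half = n // 2
decoder = train_decoder(eeg[:half], env_a[:half], max_lag)
attended, scores = decode_attention(eeg[half:], [env_a[half:], env_b[half:]], decoder, max_lag)
print(attended, [round(s, 2) for s in scores])
```

The same lagged design matrix can be turned around into a forward model (predicting each EEG channel from the stimulus), which is the kind of stimulus-response model used to ask which sound features are encoded.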

The auditory cognitive neuroscience group combines brain imaging, behavior and computational models to understand how natural sounds are encoded by the brain.

Selected publications:

• Fuglsang, S. A., Märcher-Rørsted, J., Dau, T., & Hjortkjær, J. (2020). Effects of sensorineural hearing loss on cortical synchronization to competing speech during selective attention. Journal of Neuroscience, 40(12), 2562-2572.

• Hjortkjær, J., Kassuba, T., Madsen, K., Skov, M., & Siebner, H. (2017). Task-modulated cortical representations of natural sound source categories. Cerebral Cortex, 28(1), 295-306.

• Fuglsang, S., Dau, T., & Hjortkjær, J. (2017). Noise-robust cortical tracking of attended speech in real-world acoustic scenes. NeuroImage, 156, 435-444.

Cognitive hearing technology

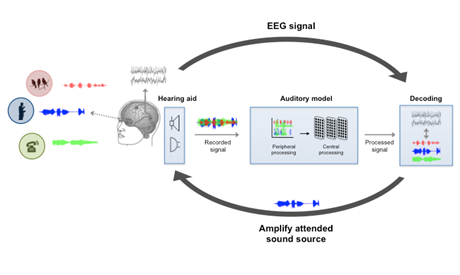

Since its invention, the hearing aid has been a device that amplifies sounds to make them audible to a person with hearing loss. In a room with many people talking at the same time, however, a conventional hearing aid does not know which sounds the listener wants to hear. Future hearing technology may also process brain signals or eye signals from the user and decode them in order to amplify the sounds that are relevant to the hearing-aid user. With our collaborators (cocoha.org), we focus on combining AI speech separation technology with attention decoding from user sensors, with the goal of creating novel hearing assistive solutions. We combine attention decoding with existing speech separation technologies, but we also design new AI speech separation systems that use brain signals directly to extract the attended source signal from an acoustic mixture. Because brain signals recorded by electrodes on the head or in the ear of a user are noisy, much of our effort is devoted to optimizing the speed and robustness of brain decoding techniques.
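The speed/robustness trade-off can be sketched in a few lines. Suppose a speech separation system has already produced one stream per talker and an attention decoder has produced a reconstructed envelope. A simple steering scheme decides, window by window, which stream correlates best with the reconstruction and boosts that stream. Short windows give faster decisions but noisier ones; long windows are more reliable but slower to follow a switch of attention. This is a hypothetical illustration, not the group's system: the reconstruction, envelopes and "separated" audio are simulated placeholders, and `windowed_decisions`, `remix`, the 10 dB gain and the window lengths are assumptions.

```python
import numpy as np

def synthetic_envelope(rng, n, width=8):
    """Hypothetical stand-in for a speech envelope: smoothed rectified noise, z-scored."""
    x = np.convolve(np.abs(rng.standard_normal(n)), np.ones(width) / width, mode="same")
    return (x - x.mean()) / x.std()

def windowed_decisions(recon, envelopes, win):
    """Decide, in each non-overlapping window of `win` samples, which source is attended."""
    decisions = []
    for start in range(0, len(recon) - win + 1, win):
        seg = slice(start, start + win)
        scores = [np.corrcoef(recon[seg], env[seg])[0, 1] for env in envelopes]
        decisions.append(int(np.argmax(scores)))
    return np.array(decisions)

def remix(sources, decisions, samples_per_decision, gain_db=10.0):
    """Boost the decoded source by `gain_db` relative to the others, holding each decision for one window."""
    gains = np.ones((len(sources), len(decisions)))
    gains[decisions, np.arange(len(decisions))] = 10 ** (gain_db / 20)
    gains = np.repeat(gains, samples_per_decision, axis=1)
    n = min(gains.shape[1], sources.shape[1])
    return (gains[:, :n] * sources[:, :n]).sum(axis=0)

# Assumption: a decoder produced `recon`, a noisy copy of talker A's envelope (64 Hz, 5 min).
rng = np.random.default_rng(1)
fs_env, n = 64, 64 * 300
env_a, env_b = synthetic_envelope(rng, n), synthetic_envelope(rng, n)
recon = env_a + 3.0 * rng.standard_normal(n)

accuracy = {}
for seconds in (1, 10):
    decisions = windowed_decisions(recon, [env_a, env_b], seconds * fs_env)
    accuracy[seconds] = float(np.mean(decisions == 0))
    print(f"{seconds:>2} s windows: {len(decisions)} decisions, accuracy {accuracy[seconds]:.2f}")

# Assumption: placeholder "separated" audio streams (20 s at 16 kHz) steered by the 10 s decisions.
fs_audio = 16000
sources = rng.standard_normal((2, fs_audio * 20))
output = remix(sources, decisions[:2], 10 * fs_audio)
```

In practice, systems often smooth or combine successive decisions rather than switching gains abruptly; the hard per-window switch here only keeps the sketch short.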

The auditory cognitive neuroscience group works on novel attention-based hearing technology that selectively enhances the sounds that the listener wants to hear.

Selected publications:

• Wong, D. D., Fuglsang, S. A., Hjortkjaer, J., Ceolini, E., Slaney, M., & de Cheveigné, A. (2018). A Comparison of Regularization Methods in Forward and Backward Models for Auditory Attention Decoding. Frontiers in Neuroscience, 12, 531.

• de Cheveigné, A., Wong, D. D., Di Liberto, G. M., Hjortkjaer, J., Slaney, M., & Lalor, E. (2018). Decoding the auditory brain with canonical component analysis. NeuroImage, 172, 206-216.

• Ceolini, E., Hjortkjær, J., Wong, D. D., O’Sullivan, J., Raghavan, V. S., Herrero, J., ... & Mesgarani, N. (2020). Brain-informed speech separation (BISS) for enhancement of target speaker in multitalker speech perception. NeuroImage, 223, 117282.

• Dau, T., Märcher Roersted, J., Fuglsang, S., & Hjortkjær, J. (2018). Towards cognitive control of hearing instruments using EEG measures of selective attention. The Journal of the Acoustical Society of America, 143(3), 1744-1744.

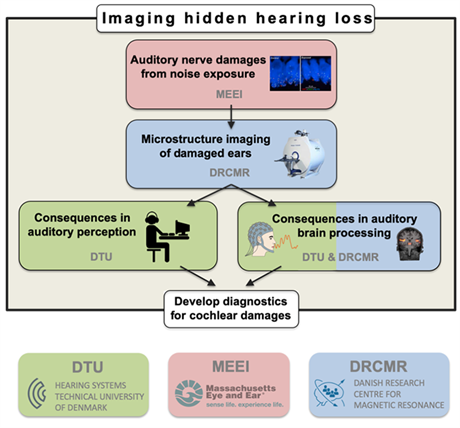

Hearing loss and the listening brain

Typical hearing loss originates in the ear, but the consequences of hearing loss extend throughout the auditory system. The ACN group investigates how the effects of hearing loss and aging throughout the auditory system can be measured and translated into diagnostics. In the context of the UHEAL project collaboration, we focus on damage to the auditory nerve caused by noise exposure or aging that is difficult to detect in standard audiometric screening (so-called ‘hidden hearing loss’). We use MR imaging and electrophysiology across species to detect this damage in the cochlea and to track its consequences for central brain processing and behavior.