
In our group, we ask fundamental questions about how the auditory system codes and represents sound in everyday acoustic environments. Our major goal is to use computational auditory modeling to describe the transformations of the acoustic input signal into its essential ‘internal’ representations in the brain. We consider both subjective aspects of the listening experience (e.g. speech intelligibility, listening effort) and electrophysiological and neuroimaging data to model the fundamental mechanisms that underlie the remarkable robustness of the normal-hearing auditory system in real-world situations. Furthermore, we aim to understand how these mechanisms are affected by hearing impairment. State-of-the-art models of hearing fail to predict auditory perception in real-life situations, such as speech recognition in a busy restaurant. As a result, we do not fully understand the consequences of hearing loss in real-world listening. We argue that this is primarily due to a lack of knowledge about the central representations of speech and other natural sounds. We aim to provide the ‘missing link’ between existing models of the auditory periphery, where a hearing impairment usually arises, and the later brain stages that determine its consequences for everyday perception.

Modeling auditory signal processing and perception

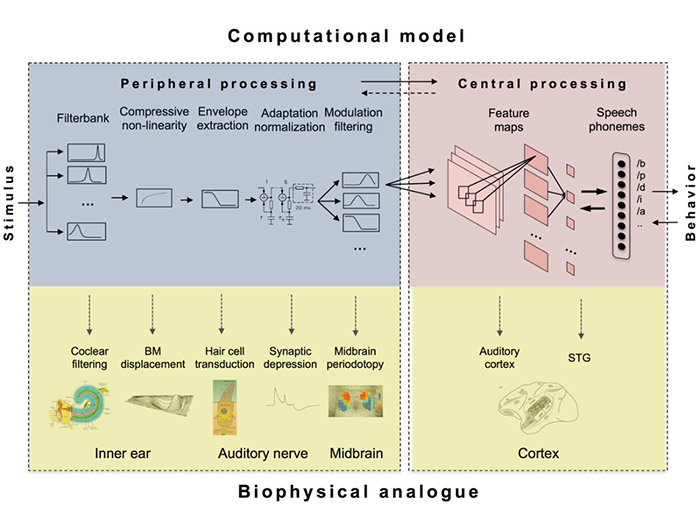

In this research stream, we focus on the quantitative prediction of behavioral outcome measures, such as signal-in-noise detectability, sound discrimination and identification abilities, and speech intelligibility. The modeling is inspired by physiological knowledge about the signal processing (e.g. in the ear and the brainstem) but reflects a ‘systems’ perspective that focuses on the ‘effective’ signal transformations underlying auditory perception. The approach is to provide a computational model framework that can predict a wide variety of behavioral data using the same set of parameters. A major motivation for our work is a better understanding of the behavioral consequences of different types of hearing loss through quantitative predictions, particularly for signals presented in noisy and/or reverberant conditions. Going forward, we aim to combine our auditory modeling with advances in deep learning, using neural networks to capture features at later ‘central’ stages that are relevant to tasks in real-life acoustic communication.

Figure 1: Auditory modeling framework. Blue: Existing computational model architecture of the human peripheral system (inner ear, auditory nerve, midbrain). Red: Model perspective, using artificial neural networks that simulate the cortical transformation of sounds into auditory objects, such as speech units. The model accepts arbitrary real-world stimuli and generates predictions at different processing stages. Yellow: Each stage corresponds to components of the biological system.
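To make the idea of ‘effective’ signal transformations more concrete, the sketch below computes a simplified envelope-domain signal-to-noise ratio, SNRenv = (P_env,S+N − P_env,N) / P_env,N, in the spirit of envelope-power-based speech intelligibility models such as Jørgensen et al. (2013). It is a minimal single-channel illustration under loudly stated assumptions: brick-wall FFT filters stand in for the auditory (gammatone) and modulation filterbanks, only one audio band (500-1000 Hz) and one modulation band (2-8 Hz) are used, and a 4-Hz amplitude-modulated tone stands in for speech. All function names are hypothetical; this is not the group's published implementation.

```python
import numpy as np

def brickwall_bandpass(x, fs, lo_hz, hi_hz):
    """Zero all FFT bins outside [lo_hz, hi_hz]. Crude stand-in for an auditory or modulation filter."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spectrum[(freqs < lo_hz) | (freqs > hi_hz)] = 0.0
    return np.fft.irfft(spectrum, n=len(x))

def hilbert_envelope(x):
    """Magnitude of the analytic signal, computed via the FFT."""
    n = len(x)
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.abs(np.fft.ifft(np.fft.fft(x) * weights))

def envelope_power(x, fs, band, mod_band):
    """AC envelope power in one modulation band, normalized by the squared envelope mean (DC)."""
    env = hilbert_envelope(brickwall_bandpass(x, fs, *band))
    dc = np.mean(env)
    ac = brickwall_bandpass(env - dc, fs, *mod_band)
    return np.mean(ac ** 2) / dc ** 2

def snr_env(mixture, noise, fs, band=(500.0, 1000.0), mod_band=(2.0, 8.0), floor=1e-6):
    """Simplified SNRenv for one audio channel and one modulation channel (hypothetical helper)."""
    p_mix = envelope_power(mixture, fs, band, mod_band)
    p_noise = max(envelope_power(noise, fs, band, mod_band), floor)
    p_target = max(p_mix - p_noise, floor)  # floor keeps the estimate positive
    return p_target / p_noise

fs = 16000
t = np.arange(fs) / fs  # 1 s of signal
# 4-Hz, 100%-modulated 750-Hz tone as a crude stand-in for speech (assumption)
target = (1 + np.cos(2 * np.pi * 4 * t)) * np.sin(2 * np.pi * 750 * t)
noise = np.random.default_rng(0).standard_normal(fs)

def scaled_noise(snr_db):
    """Scale the noise so that the broadband target-to-noise ratio equals snr_db."""
    gain = np.sqrt(np.mean(target ** 2) / (np.mean(noise ** 2) * 10 ** (snr_db / 10)))
    return gain * noise

for snr_db in (-10, 0, 10):
    n = scaled_noise(snr_db)
    print(f"{snr_db:+d} dB SNR -> SNRenv = {snr_env(target + n, n, fs):.2f}")
```

Raising the input SNR increases SNRenv because more of the target's 4-Hz modulation survives in the mixture envelope. In a full model of this type, values from many audio and modulation channels would be combined before being mapped to intelligibility.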

Selected publications:

- Relano-Iborra, H., Zaar, J., and Dau, T. (2019). “A speech-based computational auditory signal processing and perception model”. J. Acoust. Soc. Am. 146, 3306-3317.

- McWalter, R. I. and Dau, T. (2017). “Cascaded amplitude modulations in sound texture perception”. Frontiers in Neuroscience 11, 485.

- Zaar, J. and Dau, T. (2017). “Predicting consonant recognition and confusions in normal-hearing listeners”. J. Acoust. Soc. Am. 141, 1051-1064.

- Jørgensen, S., Ewert, S.D. and Dau, T. (2013). “A multi-resolution envelope-power based model for speech intelligibility”. J. Acoust. Soc. Am. 134, 436-446.

- Jepsen, M.L. and Dau, T. (2011). “Characterizing auditory processing and perception in individual listeners with sensorineural hearing loss”. J. Acoust. Soc. Am. 129(1), 262-281.

Objective and behavioral correlates of individual hearing loss

The sources and consequences of a hearing loss can be diverse. While several approaches have aimed to disentangle the physiological and perceptual consequences of different etiologies, the characterization and rehabilitation of hearing deficits have been dominated by the results of pure-tone audiometry. In this research stream, we investigate novel approaches based on data-driven ‘profiling’ of perceptual and objective auditory deficits that attempt to capture auditory phenomena that are usually hidden by, or entangled with, a loss of audibility. The resulting ‘auditory distortions’ characterize an individual listener’s auditory profile and provide the basis for a more targeted, profile-based compensation strategy in hearing instruments. Such distortions are reflected, for example, in electrophysiological ear and brain potentials as well as in behavioral measures of speech-in-noise perception and sound identification. The experimental findings, in turn, provide strong constraints for our computational models of auditory signal processing and perception.
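The general idea of data-driven profiling can be illustrated with a generic unsupervised clustering step: each listener is described by a vector of test outcomes, and listeners with similar vectors are grouped into profiles. The sketch below applies k-means to z-scored synthetic data for two hypothetical measures (an audiometric loss in dB HL and an arbitrary supra-threshold distortion score). k-means is only a familiar stand-in here; the profiling methods used in the cited work may differ, and all names and values are illustrative assumptions.

```python
import numpy as np

def standardize(features):
    """Z-score each column so that measures on different scales contribute comparably."""
    return (features - features.mean(axis=0)) / features.std(axis=0)

def farthest_point_init(x, k, seed=0):
    """Pick a random first centroid, then repeatedly add the point farthest from all chosen centroids."""
    rng = np.random.default_rng(seed)
    centroids = [x[rng.integers(len(x))]]
    while len(centroids) < k:
        dists = np.min([np.linalg.norm(x - c, axis=1) for c in centroids], axis=0)
        centroids.append(x[np.argmax(dists)])
    return np.array(centroids)

def kmeans(x, k, n_iter=100, seed=0):
    """Plain Lloyd iterations: assign points to the nearest centroid, then move centroids to cluster means."""
    centroids = farthest_point_init(x, k, seed)
    for _ in range(n_iter):
        dists = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=2)
        labels = np.argmin(dists, axis=1)
        updated = np.array([
            x[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(updated, centroids):
            break
        centroids = updated
    return labels, centroids

# Synthetic, hypothetical listeners: column 0 = audiometric loss (dB HL), column 1 = distortion score
rng = np.random.default_rng(1)
group_a = rng.normal([25.0, 1.0], [4.0, 0.25], size=(20, 2))
group_b = rng.normal([55.0, 3.0], [4.0, 0.25], size=(20, 2))
features = standardize(np.vstack([group_a, group_b]))
labels, centroids = kmeans(features, k=2)
print(labels)
```

Standardizing first matters because the raw measures have very different units; without it, the dB HL column would dominate the distance computation.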

Figure 2: Example of a measurement setup to record neural activity patterns in response to sound. In this particular case, the listener’s task is to selectively attend to one of several talkers in different acoustic scenarios reproduced in our virtual sound environment. A major focus is to analyze the effects of hearing loss on the sound’s representation in the ears and in the brain.

Selected publications:

- Sanchez Lopez, R., Fereczkowski, M., Neher, T., Santurette, S., and Dau, T. (2020). “Robust data-driven auditory profiling towards precision audiology”. Trends in Hearing, in press.

- Mehraei, G., Shinn-Cunningham, B., and Dau, T. (2018). “Influence of talker discontinuity on cortical dynamics of auditory spatial attention”. NeuroImage 179, 548-556.

- Hjortkjær, J., Märcher-Rørsted, J., Fuglsang, S. A., and Dau, T. (2018). “Cortical oscillations and entrainment in speech processing during working memory load”. Eur. J. Neurosci. 51, 1279-1289.

- Fuglsang, S.A., Dau, T., and Hjortkjær, J. (2017). “Noise-robust cortical tracking of attended speech in real-world acoustic scenes”. NeuroImage 156, 435-444.

Auditory-model inspired compensation strategies in hearing instruments

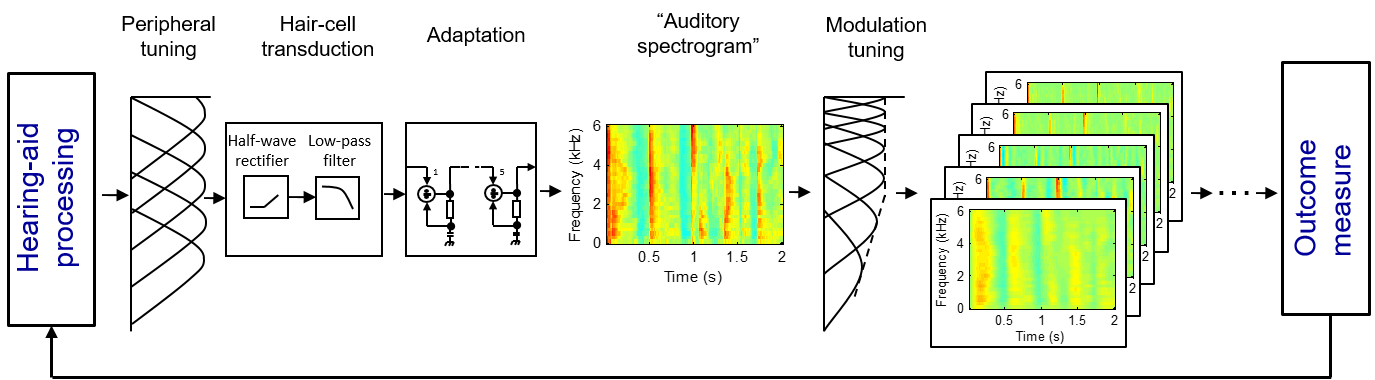

Computational models that integrate different levels of processing open up a new path for hearing-aid compensation strategies. Current compensation strategies in hearing instruments essentially aim to restore audibility and loudness perception, typically via level- and frequency-dependent amplification schemes. Rather than focusing only on amplifying the input to the impaired periphery, where the hearing loss is typically located, we design new compensation schemes that attempt to optimize representations at later, more ‘central’ stages. Importantly, while restoring peripheral processing may not always be possible with a hearing aid, it may still be possible to find signal processing schemes that ‘repair’ the central representations. Restoring higher-level features related to, e.g., speech recognition or sound segregation is essential because these features are behaviorally relevant to the listener in everyday communication. We argue that computational models that can describe how acoustic information is transformed into, e.g., speech categories will also be an important tool to understand the effects of individual hearing loss and to evaluate hearing-aid processing quantitatively.
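The ‘level- and frequency-dependent amplification’ used in current devices is commonly implemented as wide dynamic range compression (WDRC). The sketch below shows a static WDRC gain rule: linear gain below a knee point, and gain that shrinks by (1 − 1/ratio) dB for every dB of input above it. The four-band prescription is hypothetical and not derived from any audiogram; real fitting rules (such as NAL-NL2) and the time constants (attack/release) of real compressors are not modeled here.

```python
import numpy as np

# Hypothetical prescription per band: centre frequency (Hz) -> (gain below the knee point in dB,
# knee point in dB SPL, compression ratio). Values are illustrative only.
PRESCRIPTION = {
    500: (10.0, 50.0, 1.5),
    1000: (20.0, 50.0, 2.0),
    2000: (30.0, 45.0, 3.0),
    4000: (35.0, 45.0, 3.0),
}

def wdrc_gain_db(input_level_db, band_hz, prescription=PRESCRIPTION):
    """Static WDRC gain: constant below the knee point, reduced by (1 - 1/ratio) dB per dB above it."""
    gain_below_knee, knee_db, ratio = prescription[band_hz]
    excess_db = np.maximum(np.asarray(input_level_db, dtype=float) - knee_db, 0.0)
    return gain_below_knee - excess_db * (1.0 - 1.0 / ratio)

levels = np.array([40.0, 55.0, 70.0, 85.0])
for band in PRESCRIPTION:
    output_levels = levels + wdrc_gain_db(levels, band)
    print(band, output_levels)
```

With a ratio of 3 in the 2000-Hz band, a 30 dB increase in input level above the knee point yields only a 10 dB increase in output level, which is how such schemes squeeze a wide range of input levels into a listener's reduced dynamic range.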

Figure 3: Conceptual approach that reflects (i) the modeling of relevant perceptual outcome measures (e.g. speech intelligibility, sound quality) after processing through a transmission channel (in this case a hearing aid) and the normal and/or impaired auditory system and (ii) strategies for optimization of hearing-aid processing based on models of normal and impaired hearing.
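Point (ii) of Figure 3 can be illustrated with a toy optimization: choose the hearing-aid gain that makes the impaired model's representation of the aided input match the normal-hearing model's representation of the unaided input. Both ‘models’ below are hypothetical one-line level mappings (a 0 dB threshold for normal hearing; a 40 dB threshold with loudness recruitment for impaired hearing), and a simple grid search stands in for a real optimizer. The group's framework uses full auditory models instead of these placeholders.

```python
import numpy as np

def normal_rep(level_db):
    """Toy normal-hearing 'internal representation': grows 1:1 above a 0 dB threshold (hypothetical)."""
    return np.maximum(level_db, 0.0)

def impaired_rep(level_db, threshold_db=40.0, full_scale_db=100.0):
    """Toy impaired representation: threshold raised to 40 dB, but recruitment lets it catch up at 100 dB."""
    slope = full_scale_db / (full_scale_db - threshold_db)
    return np.maximum(level_db - threshold_db, 0.0) * slope

def optimal_gain_db(input_level_db, gains_db=np.arange(0.0, 60.5, 0.5)):
    """Grid search for the gain that minimizes the squared representation mismatch."""
    errors = (impaired_rep(input_level_db + gains_db) - normal_rep(input_level_db)) ** 2
    return gains_db[np.argmin(errors)]

for level in (25.0, 50.0, 75.0, 100.0):
    print(level, optimal_gain_db(level))
```

Under these assumptions the optimal gain falls from 30 dB at a 25 dB input to 0 dB at a 100 dB input. A level-dependent, compressive gain therefore emerges from matching the two representations instead of being prescribed directly.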

Selected publications:

- Kowalewski, B., Fereczkowski, M., Strelcyk, O., MacDonald, E., and Dau, T. (2020). “Assessing the effect of hearing-aid compression on auditory spectral and temporal resolution using an auditory modeling framework”. Acoustical Science and Technology 41, 214-222.

- Zaar, J., Schmitt, N., Derleth, R.P., DiNino, M., Arenberg, J.A., and Dau, T. (2017). “Predicting effects of hearing-instrument signal processing on consonant perception”. J. Acoust. Soc. Am. 142, 3216-3226.

- Wiinberg, A., Jepsen, M.L., Epp, B. and Dau, T. (2018). “Effects of hearing loss and fast-acting hearing-aid compression on amplitude modulation perception and speech intelligibility”. Ear and Hearing.

- Wiinberg, A., Zaar, J. and Dau, T. (2018). “Effects of expanding envelope fluctuations on consonant perception in hearing-impaired listeners”. Trends in Hearing 22, 2331216518775293.

Speech perception and communication in complex environments

In everyday life, speech is often mixed with many other sound sources as well as with reverberation. A classic example is the “cocktail-party effect”, the ability of normal-hearing listeners to effortlessly follow a single voice in a room with many talkers. In contrast, hearing-impaired people have great difficulty understanding speech in such adverse conditions, even when reduced audibility has been fully compensated for by a hearing aid. In this research stream, we investigate the perception of normal-hearing and hearing-impaired listeners in realistic sound environments. Furthermore, we investigate the benefit of hearing devices in scenarios that listeners actually experience. Realistic sound scenes can be reproduced using virtual sound environments, such as the audio-visual immersion lab (AVIL).

One important element is to characterize critical acoustic scenarios that are representative of the difficulties faced by hearing-aid users in their daily lives. Current hearing-aid processing algorithms, such as beamforming, compression and de-reverberation, are often challenged in such complex situations with diffuse sound fields and multiple sound sources. In addition to the acoustic features, we also explore ‘ecologically valid’ tasks that more closely represent people’s real-life listening and communication behavior, including audio-visual integration. We explore the ‘breakdown’ points of communication as a function of the complexity of the sound scenarios considered, and aim to analyze and predict such behavior using models of aided signal processing and perception.
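Why diffuse sound fields challenge beamforming can be shown with a textbook calculation. The sketch below evaluates an idealized two-microphone delay-and-subtract (first-order differential) array, the basic building block of many directional hearing-aid microphones. It assumes free-field plane waves, no head, and a hypothetical 12 mm microphone spacing. The array places a perfect null behind the listener, so a single interferer at 180° would be cancelled, yet in a spherically diffuse field (where cos θ is uniformly distributed on [−1, 1]) its benefit, the directivity index, is only about 10·log10(3) ≈ 4.8 dB at low frequencies.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed

def cardioid_response(cos_theta, freq_hz, spacing_m=0.012):
    """Magnitude response of a delay-and-subtract array, normalized to 1 for a plane wave from the front.

    The rear microphone signal is delayed by spacing/c and subtracted from the front microphone signal.
    """
    k = 2 * np.pi * freq_hz / SPEED_OF_SOUND
    raw = np.abs(1 - np.exp(-1j * k * spacing_m * (1 + cos_theta)))
    front = np.abs(1 - np.exp(-2j * k * spacing_m))
    return raw / front

def diffuse_directivity_index_db(freq_hz, spacing_m=0.012, n_points=20001):
    """Frontal gain relative to a spherically isotropic (diffuse) noise field, in dB."""
    u = np.linspace(-1.0, 1.0, n_points)  # cos(theta), uniform over the sphere
    mean_power = np.mean(cardioid_response(u, freq_hz, spacing_m) ** 2)
    return 10 * np.log10(1.0 / mean_power)

for f in (500.0, 1000.0, 4000.0):
    print(f, round(float(diffuse_directivity_index_db(f)), 2))
```

The contrast between complete cancellation of one rear source and a few dB of attenuation for diffuse noise is one reason why device benefit measured in simple laboratory setups may not carry over to reverberant, multi-talker scenes.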

Figure 4: People’s behavior (e.g. when communicating) and the efficiency of the signal processing in hearing instruments strongly depend on the complexity of the acoustic scene around us. The reproduction of ecologically valid, yet fully controlled, acoustic scenes (e.g. a meeting space or a simulated restaurant) is crucial for our analysis of the challenges a listener faces, even with modern hearing instruments.

Selected publications:

- Ahrens, A., Marschall, M., and Dau, T. (2019). “Measuring and modeling speech intelligibility in real and loudspeaker-based virtual sound environments”. Hearing Research.

- Cubick, J., Buchholz, J.M., Best, V., Lavandier, M., and Dau, T. (2018). “Listening through hearing aids affects spatial perception and speech intelligibility in normal-hearing listeners”. J. Acoust. Soc. Am. 144, 2896-2905.

- Ahrens, A., Marschall, M. and Dau, T. (2020). “The effect of spatial energy spread on sound image size and speech intelligibility”. J. Acoust. Soc. Am. 147, 1368-1378.

- Hassager, H.G., Wiinberg, A., and Dau, T. (2017). “Effects of hearing-aid dynamic range compression on spatial perception in a reverberant environment”. J. Acoust. Soc. Am. 141, 2556-2568.